Click here to read the article about creating ELA.

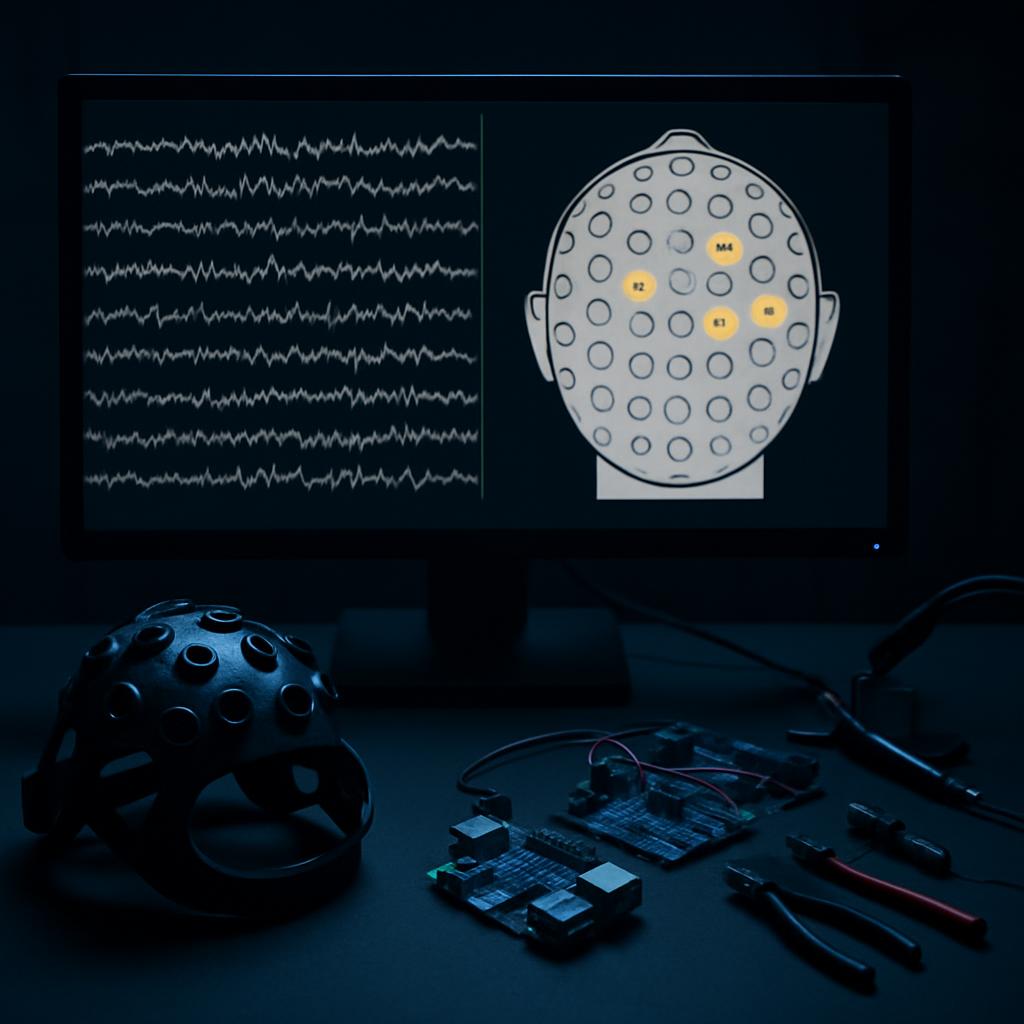

This phase of the project extended ELA, my humanoid robot, into the biomedical engineering domain by integrating a Brain-Computer Interface (BCI). Using a non-invasive Emotiv EEG headset, ELA became a platform for studying how neural signals can be translated into safe, intentional physical actions – a core challenge in modern biomedical engineering.

Biomedical Motivation

In many clinical scenarios, patients retain cognitive intent but lose voluntary motor control due to conditions such as spinal cord injury, stroke, or neurodegenerative disease. Biomedical engineering solutions aim to restore this lost link between the brain and the physical world.

This project addresses that challenge directly: the integrated BCI converts brain activity into robotic motion without relying on muscular input.

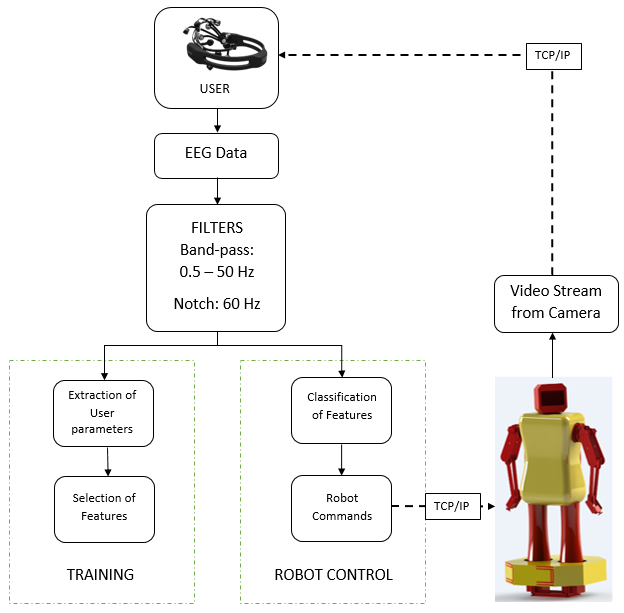

BCI-Centered System Architecture

The system was designed to reflect architectures commonly used in biomedical assistive devices:

Separating high-level neural interpretation from low-level actuation improves reliability and mirrors design practices in medical-grade systems.
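To make that separation concrete, here is a minimal Python sketch of the idea. It is not ELA's actual code: the class names, the set of intents, and the 0.7 confidence cutoff are all assumptions chosen for illustration. The point is only that the interpretation side never touches motors, and the actuation side never sees EEG data.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Intent(Enum):
    """High-level intents the interpretation layer may emit (hypothetical set)."""
    STOP = "stop"
    DRIVE_FORWARD = "drive_forward"
    WAVE_ARM = "wave_arm"


@dataclass(frozen=True)
class Command:
    intent: Intent
    confidence: float  # 0.0 to 1.0, as reported by some upstream classifier


class ActuationLayer:
    """Low-level side: knows about actuators, nothing about EEG."""

    def __init__(self) -> None:
        self.log: List[str] = []

    def execute(self, intent: Intent) -> None:
        # A real robot would drive motors here; this sketch only records the call.
        self.log.append(intent.value)


class InterpretationLayer:
    """High-level side: turns classifier output into vetted intents."""

    def __init__(self, actuators: ActuationLayer, min_confidence: float = 0.7) -> None:
        self.actuators = actuators
        self.min_confidence = min_confidence  # assumed cutoff, not from the project

    def handle(self, command: Command) -> Intent:
        # Fall back to STOP whenever the classifier is unsure.
        if command.confidence < self.min_confidence:
            chosen = Intent.STOP
        else:
            chosen = command.intent
        self.actuators.execute(chosen)
        return chosen
```

With this split, the actuation layer can be tested or replaced without any EEG hardware attached, and an uncertain classification never reaches the motors as anything other than a stop request.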

When I worked on this project, there were no open-source alternatives to the Emotiv headset for EEG capture. Reverse engineering the headset to get raw data would have meant ripping it apart to expose the internals and designing new electronics, which would have been a whole other project in itself.

The field has developed since then, and more open-source headsets are now available for research!

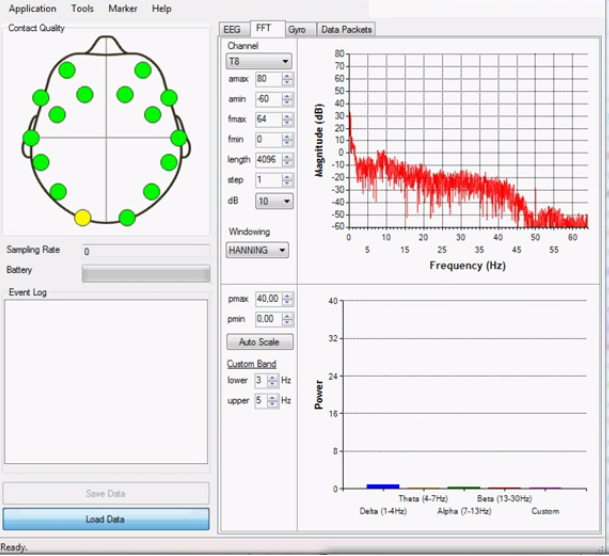

Neural Signal Processing and Control

EEG data from the Emotiv headset is inherently noisy and user-dependent. To ensure robustness and user safety, I implemented:

Recognized neural patterns are translated into predefined actions such as wheeled movement or 6-DOF arm gestures, reducing cognitive load and improving repeatability.
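One way to picture the mapping from recognized patterns to predefined actions is the sketch below. It is an illustration under stated assumptions, not the safeguards actually used in the project: the label names, the action names, the 0.6 threshold, and the three-repeat rule are all hypothetical. The technique shown is a simple confidence threshold combined with debouncing, so that a single noisy classification does not move the robot.

```python
from collections import deque
from typing import Deque, Optional

# Hypothetical mapping from recognized pattern labels to predefined actions.
ACTIONS = {
    "push": "drive_forward",
    "pull": "drive_backward",
    "lift": "arm_wave",
}


class CommandGate:
    """Releases an action only after a label has been seen several times in a row."""

    def __init__(self, threshold: float = 0.6, required_repeats: int = 3) -> None:
        self.threshold = threshold
        self.required_repeats = required_repeats
        self.recent: Deque[str] = deque(maxlen=required_repeats)

    def update(self, label: str, confidence: float) -> Optional[str]:
        """Return an action name once a label has been stable, else None."""
        # A weak or unknown detection resets the streak.
        if confidence < self.threshold or label not in ACTIONS:
            self.recent.clear()
            return None
        self.recent.append(label)
        stable = (
            len(self.recent) == self.required_repeats
            and len(set(self.recent)) == 1
        )
        if stable:
            self.recent.clear()  # require a fresh streak before the next action
            return ACTIONS[label]
        return None
```

Calling `update("push", 0.8)` three times in a row returns `None`, `None`, then `"drive_forward"`; any low-confidence reading in between resets the count.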

Biomedical Applications

The integrated BCI enables several biomedical use cases:

The robot’s LCD face provides immediate visual feedback, reinforcing the association between neural activity and action – an important factor in rehabilitation systems.

Engineering Constraints and Clinical Relevance

Using a consumer-grade EEG device highlights key biomedical challenges:

Addressing these constraints reinforced the importance of safety, redundancy, and simplicity in biomedical system design.

Final Thoughts

Integrating a Brain-Computer Interface into this humanoid robot transformed the project into a practical biomedical engineering experiment. The system achieved a command classification accuracy above 75%, demonstrating that reliable intent-based control is possible using non-invasive, consumer-grade EEG hardware.

While not a clinical device, the project reflects real biomedical engineering principles: safe system partitioning, robust signal processing, and user-centered feedback. It provides a strong foundation for future work in assistive robotics, prosthetic control, and neurorehabilitation technologies.